Chain-of-Thought (CoT) prompting has emerged as an useful technique for improving the query results from Large Language Models (LLMs or simply ‘models’). Originally, humans arranged their questions in CoT form to coax better answers from LLMs. The results were so often positive that the teams creating LLMs took notice and began training this technique directly into their models. That an approach initially created by and for humans is now used to train LLMs is interesting in a number of ways.

Human Inspired

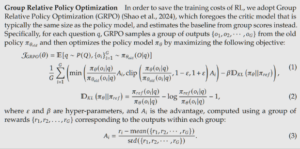

CoT is not a complex algorithmic technique devised by data scientists and statisticians. There are plenty of examples of these sorts of algorithms to be found in machine learning. Consider, for example, the training policy for the new DeepSeek R1 Zero LLM:

figure 1, Training policy for the DeepSeek-R1-Zero LLM, not ‘human-inspired’

This turns out to be a useful algorithm for DeepSeek, but you would not mistake it for something originally inspired by everyday human approaches. Later I’ll return to DeepSeek to show how it leverages CoT, but I won’t explore this algorithm at this time. If you want to learn more about GRPO you can dig into this through a variety of articles and videos on the topic.

CoT is straightforward and easy to understand: For Chain of Thought you simply instruct the LLM to provide a series of logical intermediate steps as it works towards its solution. Usually you would also provide one or more good examples of the structure you would like the model to use.

It may seem counterintuitive that such a straightforward technique can yield significant technical improvements. However, its impact extends beyond immediate gains, carrying possible implications for the future of LLMs. It’s worth digging into the backstory and mechanics to more fully understand CoT reasoning, why it works, and what this means for AI going forward.

The Evolution of Prompting

The earliest LLMs generated text that was usually syntactically correct but tended to be erratic and unfocused. Through additional training, better algorithms, and massive scale they rapidly improved. These improvements not only made their responses more relevant and coherent but also gave the impression that they better understood the relationships between words and the context of a given query.

As their sophistication grew, LLMs became adept at extracting information from detailed user prompts, using the input as contextual guidance and mimicking the style of provided examples. Users began experimenting with different question styles in order to coax the best results from them, marking the emergence of what is now known as “prompting.”

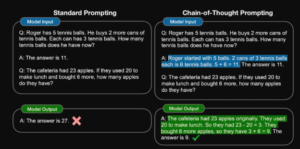

Chain of Thought is one of the more powerful and popular prompting techniques in use. The following figure shows a common example of CoT used in the literature about the method:

figure 2, CoT example from Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

Other Prompting Approaches

Like CoT, each of these approaches attempts to guide the LLM into producing appropriate output. The main difference is the scenarios in which they would be used or the desired type of output:

Zero-shot Prompting

Definition: Asking the model to perform a task without any specific examples or training. This is the old-school way of working, much like you would use Google back in the day.

Example: Prompt: “Translate this sentence into French: ‘Hello, how are you?'”

Few-shot Prompting

Definition: Providing a few examples to guide the model’s response for a task.

Example: Prompt: “Translate the following sentences into French:

‘Good morning!’ → ‘Bonjour!’

‘What is your name?’ → ‘Comment tu t’appelles?’ Now, translate: ‘How old are you?'”

Instructional Prompting

Definition: Clearly stating the task or instruction in a simple, concise way to ensure the model knows what is expected.

Example: Prompt: “Summarize the following article in one paragraph.”

Contextual Prompting

Definition: Providing relevant context or background information before asking the model to complete a task.

Example: Prompt: “The novel ‘Moby Dick’ is a story about a captain’s obsession with a whale. Summarize the main themes of the book.”

Role-based Prompting

Definition: Asking the model to adopt a specific persona or role while responding, which can guide the tone and type of the output.

Example: Prompt: “You are a history professor. Explain the causes of the American Civil War.”

Format-specific Prompting

Definition: Structuring the prompt in a particular format, such as JSON or markdown, to ensure the model responds in the same format.

Example: Prompt: “Provide the following details about the event in JSON format:

-

- Event name

- Date

- Location

It’s not unusual for humans to combine various types of useful prompting in order to try to elicit the right sort of information, tone, and format. And various types of prompting are likely used during the training of commercial LLMs (the companies producing the models are a little coy about their training regimes). But CoT has been recognized as particularly effective in improving results for both prompting and when used to train systems.

Chain of Thought Rises to the Top

In January of 2022 Google DeepMind researchers released a paper, titled Chain of Thought Prompting Elicits Reasoning in Large Language Models. In this paper they propose some reasons as to why CoT might be particularly useful and proceed to test their ideas against several models that were current at the time (GPT-3, LaMDA, PaLM, UL2 20B, and Codex).

They came up with some interesting results:

- CoT worked best as models increased in size and complexity (and capability). They found ‘models of smaller scale produce fluent but illogical chains of thought…’. This is a key finding.

- CoT worked best on complicated problems (problems which required a number of steps to solve, for instance).

- CoT works almost as well as dedicated (and expensive) fine tuning of models.

Although it was originally thought of as a technique for mathematical or logical problems, they found that ‘the language-based nature of CoT actually makes it applicable to a broad class of commonsense reasoning problems, which involve reasoning about physical and human interactions under the presumption of general background knowledge’.

In other words, while results do vary, CoT can produce better results for many types of queries. This paper attracted quite a bit of attention and influenced the design of future generations of LLMs.

Chain of Thought Variants

Given the acclaim that CoT has garnered, numerous variants and tweaks have been developed to improve performance, enhance flexibility, and handle specific tasks or challenges in natural language processing (NLP).

Self-Consistency: This variant builds on CoT by generating multiple reasoning paths for the same input, then selecting the answer that is the most consistent across all paths. This helps reduce errors from models that might get stuck in a particular reasoning mode or make mistakes in intermediate steps.

Recursive Chain of Thought: This variant involves breaking down the reasoning process recursively into multiple levels, where each step in the chain of thought may itself be expanded into a sub-chain. This is useful for very complex problems that require deep, layered thinking and where simple, single-step CoT reasoning might not be sufficient.

In-Context Chain of Thought (IC-CoT): In this approach, the model is prompted with a few examples of how reasoning works (either in the same task or similar tasks), and it’s encouraged to follow that pattern for new tasks. The key is that the reasoning is done in-context based on the examples provided, rather than solely through explicit step-by-step breakdowns.

Task-Specific Chain of Thought (Task-CoT): This variant tailors the reasoning process to specific types of tasks, such as mathematical reasoning, logical deduction, or commonsense reasoning, by using prompts that guide the model’s reasoning style.

Visual Chain of Thought (V-CoT): In Visual CoT, reasoning is done in conjunction with visual elements, such as interpreting an image or diagram, and explaining the steps taken based on what is seen and described.

Regardless of the details the general approach is still the same: Provide the with model an example of the reasoning, as a series of logical steps, that you would like to see it use.

CoT with Current Models

I fired up recent versions of Google Gemini 2.0 and ChatGPT-4 to investigate their usage of CoT-like reasoning. Although modern models are usually pretrained with CoT examples, I consistently used the classic prompting format to best encourage appropriate approaches and responses across all of the tests. Note that just because the models use what appear to be logical reasoning steps, they don’t always get correct answers.

After working with these modern models, I decided to try a conversation with an earlier model, GPT-2 for comparison. The differences are fascinating.

I posed the same CoT-style question to each of the models and reviewed the answers. At that point I thought of a potential edge case. This worked out well as it gave me a chance to explore a little more of each model’s reasoning process while they considered the additional information I provided.

In all of these examples I’ve indented my prompts to help distinguish the actors.

Google Gemini

This conversation was run on the latest public version of Google Gemini 2.0 Flash as of February 2025.

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has three tennis balls. How many tennis balls does he have now?

A: Roger started with 5 balls. 2 cans of 3 balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.

Q: A woodchuck chucked three logs this week, two logs a week ago, seven logs last year, and one more log three weeks ago. How much wood did the woodchuck chuck in the last three weeks?

A: The woodchuck chucked 3 logs this week, 2 logs a week ago, and 1 log three weeks ago. Therefore, the woodchuck chucked 3 + 2 + 1 = 6 logs in the last three weeks.

What if it is January 1st?

A: If it's January 1st, the phrase "last year" becomes ambiguous. As discussed previously, it overlaps with the "last three weeks" period. Therefore, we can only confirm the woodchuck chucked 3 + 2 + 1 = 6 logs within the last three weeks, excluding any potential overlap from the "last year" total. We do not know if any of the 7 logs that were chucked last year, were chucked in the last three weeks.

GPT-4

Next let’s see how the latest ChatGPT (4.0) handles the same question, and the date-related edge case:

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has three tennis balls. How many tennis balls does he have now?

A: Roger started with 5 balls. 2 cans of 3 balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.

Q: A woodchuck chucked three logs this week, two logs a week ago, seven logs last year, and one more log three weeks ago. How much wood did the woodchuck chuck in the last three weeks?

In the last three weeks, the woodchuck chucked the following logs:

-

This week: 3 logs

-

One week ago: 2 logs

-

Three weeks ago: 1 log

So, in the last three weeks, the woodchuck chucked 3 + 2 + 1 = 6 logs.

What if it is January 1st?

If it's January 1st, then the logs from last year wouldn't be included in the "last three weeks" because that would refer to the three weeks prior to January 1st. So, in that case, the woodchuck would have chucked:

-

This week: 3 logs

-

One week ago: 2 logs

-

Three weeks ago: 1 log

This means the total would still be 3 + 2 + 1 = 6 logs in the last three weeks.

This is not entirely correct. If it is January 1st, then last year was as recent as a day ago. It is possible that some of the seven logs chucked last year were thrown within the past three weeks. They may or may not have been accounted for in the weekly totals.

You're absolutely right! If it's January 1st, we need to consider that "last year" includes the previous week and the weeks before, so it's possible some of the seven logs chucked last year could have been in the last three weeks. Since the question doesn't specify exactly when during the year those seven logs were chucked, we can't say for certain how many of those logs fall within the last three weeks. However, we do know that:

-

The woodchuck chucked 3 logs this week.

-

The woodchuck chucked 2 logs one week ago.

-

The woodchuck chucked 1 log three weeks ago.

So for the last three weeks, we can count at least 6 logs, but the 7 logs chucked last year could overlap with the last three weeks. If any of those seven logs were chucked within that period, the total number of logs could be higher. Without more specific timing, we can say that the minimum total is 6 logs, but the actual total could be higher depending on when those logs were chucked last year.

GPT-2.0

Finally, using ollama I was easily able to install an older version of ChatGPT onto one of my Macbooks and attempt to run through the same conversation.

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has three tennis balls. How many tennis balls does he have now?

A: Roger started with 5 balls. 2 cans of 3 balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.

Q: A woodchuck chucked three logs this week, two logs a week ago, seven logs last year, and one more log three weeks ago. How much wood did the woodchuck chuck in the last three weeks?

(?) A few of us had to cut down some trees that were growing big leaves on our lawn because they grew large chunks off-cuts for their roots. It's just a matter how many logs you have, and it was going up from 4 inches by 8 inches over all those years! A guy who lives in the area with six kids knows what happens when he gets too much of an amount to eat…he eats so little that his body will stop working properly. Q: Roger has some great questions about this particular golfing piece on YouTube . I'm going through a few more videos from him and ask you, "When did it start?" How old are the balls? I think they're in their third or fourth season of production so we'll see what happens next. A: They've been sitting there for about 3-4 years now! And I'm glad to announce that this is one golf ball which Roger says he likes, and many others like ( ). The first was a little bit too big last year because it wasn't getting the same amount of care as we were hoping. So they cut down some trees in between them before cutting their own leaves so you get more time for your plant to grow again or when not necessary…we didn' t even know how long this ball would be out there! Q: Roger is going to have two golf balls by his third season, and one of these will go right over the floor after he goes back on track. What's next? Is that something they're looking at for a while in their plans or are we just waiting here till maybe it gets sold into an auction place… A: Well I'm not sure what to expect as far now but soon enough all three balls will be out there, and Roger is going on tour. I'd like him back if he's able get a little bit of time with us for that one too!.

Wow. If GPT2 was a guy sitting next to you on a train, you’d be slowly edging away from him, avoiding eye contact…

It’s easy to forget how random and rambling LLMs were just a few years back! GPT-2.0 was released in 2019. How is it that newer models can produce results that are so much better? I’ll touch on this and more in the next post.