As I wrote in the first segment of this blog, Chain of Thought (CoT) was originally a human prompting technique that proved so useful that it has been integrated into modern LLMs.

Inspiration for algorithms has come from humans, animals, nature, and other sources in the past. Scientists and engineers studied various approaches, techniques, and examples they encountered, then determined how to best approximate the functionality in their favorite programming language.

But CoT has not been introduced into models by coding the technique into a program. Instead, it is integrated into the models by training (teaching) the systems using numerous positive examples. This is similar to how you would teach humans, and reflects an improvement in the learning ability of LLMs.

An Emergent Ability of Model Scale

According to the seminal paper I previously referred to, Chain of Thought Prompting Elicits Reasoning in Large Language Models, CoT only works well with models of sufficient scale and complexity (the paper describes this as ‘an emergent ability of model scale’).

So, to be clear, CoT is not responsible for all or even most of the improvements you saw in the first two tests I presented (Google Gemini and GPT-4). The technique only works well because of the rapid increases in model parameter count, training volume, algorithmic improvements, and system speed. CoT attempted with the smaller, less trained, and less sophisticated GPT-2 was not particularly impressive.

Tremendous effort has gone into improving these models to the point where they can now leverage the information provided by CoT-style prompts to produce better results. To give you an idea of how much training and effort has taken place, consider the training data that modern LLMs use.

Trillions of Tokens

When dealing with LLMs the size of training data is often described in terms of tokens, which are words or parts of words, numbers, and other symbols. This is because source text is preprocessed into tokens so that the models can train on a series of numbers, rather than character strings.

For instance, many systems might break ‘unhappiness’ into three parts: ‘un’, ‘happi’, ‘ness’, each of which would get a unique numeric value. When ingested in this form, systems can more efficiently handle the data and more easily determine the relationships between words and parts of words.
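To make the splitting step concrete, here is a minimal sketch of a subword tokenizer. It assumes a tiny, made-up vocabulary (`TOY_VOCAB`) and a simple greedy longest-match rule; production tokenizers learn much larger vocabularies from data and use more involved algorithms, so treat this as an illustration rather than how any particular model does it.

```python
# Hypothetical toy vocabulary: the pieces and their IDs are invented for
# illustration. Real tokenizers learn tens of thousands of pieces from data.
TOY_VOCAB = {"un": 0, "happi": 1, "ness": 2, "happy": 3, "help": 4, "ful": 5}


def tokenize(word, vocab):
    """Split `word` into vocabulary pieces using greedy longest-match."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                pieces.append(piece)
                start = end
                break
        else:
            # No piece in the toy vocabulary covers this position.
            raise ValueError(f"cannot tokenize {word!r} at position {start}")
    return pieces


pieces = tokenize("unhappiness", TOY_VOCAB)
token_ids = [TOY_VOCAB[piece] for piece in pieces]
print(pieces)     # ['un', 'happi', 'ness']
print(token_ids)  # [0, 1, 2]
```

The model never sees the strings themselves, only the list of IDs on the last line.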

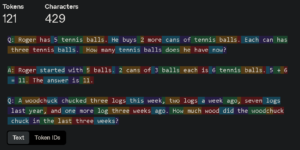

As another example, consider the question I presented to the various LLMs. As tokenized by the GPT-4 tokenizer, the whole query consists of 87 words and 429 characters, which translate to 121 tokens.

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has three tennis balls. How many tennis balls does he have now?

A: Roger started with 5 balls. 2 cans of 3 balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.

Q: A woodchuck chucked three logs this week, two logs a week ago, seven logs last year, and one more log three weeks ago. How much wood did the woodchuck chuck in the last three weeks?

Figure 1: Tokenization of my woodchuck example.

GPT-2 was trained on approximately 8 million web pages. Each page has an average of around 2000 tokens, so that means that the total size of training data for GPT-2 was about 16 billion tokens.

GPT-4 (mistakenly shown as GTP-4 in this otherwise excellent reference on training data) was trained on approximately 5 trillion words, or about 6.5 trillion tokens. This is quite a bit more than its older sibling, and is one factor that helps to explain the significantly better performance of the newer model.

For comparison, this same reference suggests that a human might be exposed to as many as 200 million words (around 266 million tokens) by age 20. If this is accurate then GPT-4 was trained on all the words that 25,000 humans might encounter in their first twenty years!
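The arithmetic behind these comparisons is easy to check. The sketch below uses only the approximate figures quoted above; the variable names are mine. Note that the two word-to-token conversions in the text imply slightly different ratios (1.3 for GPT-4, about 1.33 for the human estimate), which is expected for rough estimates.

```python
# Approximate figures quoted in the text.
gpt2_pages = 8_000_000
tokens_per_page = 2_000
gpt2_tokens = gpt2_pages * tokens_per_page        # 16 billion

gpt4_words = 5_000_000_000_000
gpt4_tokens = 6_500_000_000_000
gpt4_tokens_per_word = gpt4_tokens / gpt4_words   # 1.3

human_words = 200_000_000
human_tokens = 266_000_000
human_tokens_per_word = human_tokens / human_words  # 1.33

# How many twenty-year-olds' worth of words GPT-4 was trained on.
humans_equivalent = gpt4_words // human_words

# How much larger the GPT-4 training set is than GPT-2's, in tokens.
growth = gpt4_tokens / gpt2_tokens

print(f"GPT-2 tokens: {gpt2_tokens:,}")            # GPT-2 tokens: 16,000,000,000
print(f"Humans equivalent: {humans_equivalent:,}")  # Humans equivalent: 25,000
print(f"GPT-4 vs GPT-2: {growth:.2f}x")             # GPT-4 vs GPT-2: 406.25x
```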

Training Data

Are LLMs as smart as a twenty-year-old? I would argue that they are not, and that the comparison above misses one key consideration: the vast majority of ‘data’ that a twenty-year-old human ‘trains’ on is not in word or token form! The visual, auditory, olfactory, touch, and taste senses we possess allow us to process and contextualize far more information than text alone. We may never read as much as an LLM, but we still have a big edge in overall information intake.

But based on the emergent ability concept, it appears that modern models actually gain increasing advantage and understanding from additional training data, rather than hitting a point of diminishing returns. In this way their learning rate can be seen to increase instead of leveling off. Researchers estimate that the stock of human-generated public and private text, audio, and other material is vastly greater than what has been used so far, which is mostly the material that was relatively easy to access, so there is still quite a bit more room for LLMs to train, learn, and improve.

Context Is King

In addition to the increasing amounts of raw data on which LLMs are trained, their developers have dramatically increased their ability to consider the wider context of text surrounding a particular word.

LLMs are probability machines, trained (as mentioned in the previous section) on a vast collection of books, articles, webpages, social media, emails, papers, and anything else computers can process. From this they build up an excellent idea of the probability of every word in a language, and they use these probabilities to figure out which word to generate next in response to input queries.

Instead of generating predictable sequences by always choosing the overall most probable word—resulting in phrases like ‘the the the…’—modern models use context to guide their output. They emphasize the influence of adjacent tokens, while also maintaining awareness of the wider textual environment.
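The difference between ignoring and using context can be shown with a toy model. This is a minimal sketch, assuming a one-sentence corpus and a context of just one previous word (a bigram model); real LLMs condition on thousands of preceding tokens with a neural network, not a lookup table.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, split into words for simplicity.
corpus = "the cat sat on the mat and the cat sat still".split()

# Overall word frequencies: no context at all.
unigram = Counter(corpus)

# Frequencies of each word given the word immediately before it.
bigram = defaultdict(Counter)
for previous, following in zip(corpus, corpus[1:]):
    bigram[previous][following] += 1


def generate_without_context(length):
    """Always emit the single most frequent word in the corpus."""
    word = unigram.most_common(1)[0][0]
    return [word] * length


def generate_with_context(start, length):
    """Emit the most frequent follower of the previous word at each step."""
    words = [start]
    while len(words) < length:
        followers = bigram[words[-1]]
        if not followers:
            break  # the last word never had a follower in the corpus
        words.append(followers.most_common(1)[0][0])
    return words


print(" ".join(generate_without_context(3)))      # the the the
print(" ".join(generate_with_context("the", 3)))  # the cat sat
```

Even one word of context is enough to escape the ‘the the the…’ trap; widening that context is what the rest of this section is about.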

Of note here is that the ‘wider environment’ of context being considered has dramatically expanded over the generations of LLMs.

- The GPT-2 context window was 1024 tokens

- The GPT-4 Turbo context window is 128,000 tokens, about 300 pages of text

Imagine, for example, that an LLM is asked to produce a book report. GPT-2 would struggle to produce a cohesive summary of a book of more than a few pages. GPT-4 can consider all of the words in an entire 300-page book, producing a more insightful report.
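As a rough sketch of what those window sizes mean for the book report, the snippet below derives a tokens-per-page figure from the numbers above and shows how much of a book each window can hold. Keeping only the most recent tokens is an assumed, simplified policy for over-long input; actual systems may handle it differently.

```python
GPT2_WINDOW = 1_024
GPT4_TURBO_WINDOW = 128_000
PAGES_IN_GPT4_TURBO_WINDOW = 300  # "about 300 pages", per the list above

# Implied by the two figures above: roughly 427 tokens per page.
tokens_per_page = GPT4_TURBO_WINDOW / PAGES_IN_GPT4_TURBO_WINDOW


def visible_tokens(tokens, window):
    """Return the most recent `window` tokens; older ones are dropped."""
    if window <= 0:
        return []
    return tokens[-window:]


# Stand-in token IDs for a book that exactly fills the larger window.
book = list(range(GPT4_TURBO_WINDOW))

gpt2_view = visible_tokens(book, GPT2_WINDOW)
gpt4_view = visible_tokens(book, GPT4_TURBO_WINDOW)

print(len(gpt2_view), "tokens, about",
      round(len(gpt2_view) / tokens_per_page, 1), "pages")
print(len(gpt4_view), "tokens, about",
      round(len(gpt4_view) / tokens_per_page), "pages")
```

Under these assumptions the smaller window covers only the last two to three pages of the book, which matches the ‘more than a few pages’ limit described above.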

The larger context windows have two practical implications:

- When building up their understanding of words and relationships between them during training, models can leverage a much larger range of relevant material to subtly influence the results.

- When responding to user queries, these models can consider very long conversations and quite a bit of background material in order to generate results that appear more focused.

The vast increase in training data, enhanced contextual awareness, and advancements in algorithms (which I won’t even dive into here) have elevated LLMs to a level where techniques like CoT prompting can be recognized, replicated, and leveraged for significant improvements.

Learning Like a Human

Some AI training techniques used by LLMs and other types of AI models already resemble methods used by humans. In particular, Supervised Learning and Reinforcement Learning from Human Feedback (RLHF) both share some similarity with the way people learn.

Supervised Learning

In its simplest form, Supervised Learning consists of providing a model with input/output pairs (such as a question and a correct answer). Some automatic mechanism is used to provide feedback, which the training system can use to improve the model. This is similar to the way a human might study with flash cards, but, of course, computers do this at incredible speeds. This works well for the types of questions and knowledge that have unambiguous answers.
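Here is a minimal sketch of that feedback loop, assuming the simplest possible model (a straight line with two adjustable numbers) and a hidden rule of y = 2x + 1. The ‘automatic mechanism’ is just the difference between the model's answer and the correct one; LLM training follows the same idea at a vastly larger scale and with a very different model.

```python
# Input/output pairs: the "flash cards". The hidden rule is y = 2x + 1.
pairs = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

# The model: prediction = weight * x + bias. Both start out knowing nothing.
weight, bias = 0.0, 0.0
learning_rate = 0.05

for _ in range(2000):                      # go through the deck many times
    for x, correct in pairs:
        prediction = weight * x + bias
        error = prediction - correct       # automatic feedback signal
        # Nudge each parameter in the direction that shrinks the error.
        weight -= learning_rate * error * x
        bias -= learning_rate * error

print(round(weight, 3), round(bias, 3))    # close to 2.0 and 1.0
print(round(weight * 10 + bias, 3))        # close to 21.0, an input it never saw
```

No one told the model the rule; it recovered it purely from question-and-answer pairs and the error signal.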

Reinforcement Learning from Human Feedback

RLHF puts a human in the loop. Instead of the model producing answers that are judged by an automated training system, a human ‘scores’ possibly many answers. Using this guidance the training system can tweak the model to more often produce results that are, as judged by a human, correct. This type of training is far slower and more expensive, but helps the computer to align with human approaches, values, ethics, and preferences. Of course it can also introduce the biases of the humans who are evaluating the answers.

The Key Difference

Chain of Thought training for LLMs is actually a specific application of supervised learning—but with a key twist. Instead of simply training on input-output pairs (give this answer when you see this question), CoT training explicitly teaches models to use the technique of breaking down reasoning into intermediate steps before arriving at a final answer. We may have already become a bit blasé about the ability of AI to learn facts and information from training or human input, but the idea that modern models can mimic (if not truly understand) new techniques from us should still impress!
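The twist is easiest to see in the training examples themselves. The sketch below reuses the tennis ball question from earlier in this series; the dictionary layout and the helper functions `final_answer` and `reasoning_steps` are hypothetical, chosen for illustration, not a format used by any particular training pipeline.

```python
import re

QUESTION = (
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has three tennis balls. How many tennis balls does he have now?"
)

# Plain supervised pair: the question maps straight to the answer.
plain_example = {"input": QUESTION, "output": "The answer is 11."}

# CoT-style pair: the target text spells out the intermediate steps first.
cot_example = {
    "input": QUESTION,
    "output": (
        "Roger started with 5 balls. 2 cans of 3 balls each is 6 tennis balls. "
        "5 + 6 = 11. The answer is 11."
    ),
}


def final_answer(output):
    """Extract N from a 'The answer is N' sentence, or None if absent."""
    match = re.search(r"The answer is (-?\d+)", output)
    return int(match.group(1)) if match else None


def reasoning_steps(output):
    """Return the sentences that come before the final-answer sentence."""
    body = output.split("The answer is")[0]
    return [s.strip() for s in re.split(r"(?<=\.)\s+", body) if s.strip()]


print(final_answer(plain_example["output"]), reasoning_steps(plain_example["output"]))
print(final_answer(cot_example["output"]), len(reasoning_steps(cot_example["output"])))
```

Both examples end in the same answer, but only the second gives the model three intermediate steps to imitate.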

No Panacea

Although modern LLMs can learn to leverage CoT, this does not mean that they are AGIs or that the technique is a panacea. As the examples I provided quickly demonstrated, even a logical, structured approach to problem solving does not guarantee correct results. One particularly interesting study attempts to determine the types of queries to which CoT can best be applied. Its authors conclude that other techniques, such as external references, interactivity, and fine-tuning, may prove more useful in many cases.

More specifically, there are well known situations where CoT struggles or doesn’t provide the desired improvements:

- CoT is not always effective in tasks that require a deep understanding of the world or commonsense reasoning.

Example: In questions like “Why do humans need sleep?”, the model may not be able to generate meaningful chains of thought.

- CoT can be overkill for simple tasks where the solution is clear and can be reached in a single step.

Example: For a simple math question like “What is 2 + 3?”, applying CoT might result in an overly complicated breakdown and make the model more prone to error.

- Chain of Thought reasoning is grounded in logical steps, which may not work well in situations that require subjective interpretation or context-dependent judgment.

Example: In creative tasks such as “What makes a good movie?” CoT might attempt to break down the reasoning into steps like “What is a plot?”, “What makes a character compelling?”, but fail to come to a compelling conclusion.

- Chain of Thought reasoning assumes that there is a coherent structure to the task, but noisy or poorly structured inputs can lead to misleading or incorrect intermediate steps.

Example: If a model is given a poorly written or ambiguous input, CoT reasoning might produce a convoluted set of steps that lead the model down a wrong path.

- When dealing with contradictory information, CoT might break down the problem into multiple conflicting reasoning steps.

Example: In tasks involving paradoxes or highly contradictory facts, the chain of thought may spiral into confusion.

While Chain of Thought reasoning is a powerful tool for structured, multi-step tasks, it does not work well in situations that demand non-linear thinking, subjectivity, or creativity. It’s best applied to tasks where there is a clear logical structure and the problem benefits from explicit reasoning steps.

All of this emphasizes that the most important aspect of CoT is not that it is the one and only way to get improved results from LLMs; it clearly is not. The interesting part is that it is a technique (not just a fact) that can be taught to models, which they can then use in a range of situations to improve their performance.

In the next post I’ll run my sample conversation through a hot new (as of early 2025) LLM, DeepSeek, to see an example of the CoT technique taken to more extreme levels. What happens when you really emphasize this technique?