In the past two posts I have been digging deep into the Chain of Thought (CoT) prompting technique for improving responses from LLMs. It was originally devised by LLM users seeking better results by convincing models to mimic a structured approach to problem solving. This approach works very well on logic-centric problems, and adds some value across a range of queries. It has proven useful enough that most model developers now pretrain their systems with CoT examples.

This technique was not effective until models gained enough power and sophistication to understand and benefit from this human-style training (this was described as ‘an emergent ability of model scale’). The growing ability of LLMs to understand and learn or mimic human-inspired techniques may reflect an inflection point in their development.

With all of this in mind, I wanted to look at DeepSeek, a newer LLM that is attracting quite a bit of attention.

DeepSeek

Shortly before I began this article, a new LLM called DeepSeek was released. As it strongly emphasizes the CoT approach, I decided to try it out along with the other models I have been testing. The DeepSeek developers are claiming excellent performance on many types of math, logic, and word problems, based in part upon their usage of CoT.

A number of bloggers have expressed concerns about both the biases built into DeepSeek and its terms and conditions. So instead of running my tests on the public service, I ran the 24 billion parameter version on my personal M1 Max system, again using ollama. This also helps me to justify having such a powerful personal machine 🙂
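For readers who want to script a similar local run, here is a minimal sketch that talks to ollama's local REST endpoint (`/api/generate` on port 11434) using only the Python standard library. The helper names `build_request` and `ask` are hypothetical, and the model tag is left as a parameter because this post does not give the exact tag that was pulled; treat this as one possible setup, not a record of how the test was actually run.

```python
import json
import urllib.request

# Default address of a locally running ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With streaming disabled, the full text arrives in the "response" field.
    return body["response"]
```

Calling `ask("<model-tag>", "How much wood...")` requires the ollama server to be running and the chosen model to have been pulled already; the tag shown is a placeholder.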

Using the same prompt I presented to Gemini and ChatGPT, it should be clear just how much DeepSeek relies on CoT. I’ll forgive you if you skim through the following output, but at least glance at it to get a feel for the difference between this particular model and the others I have presented.
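The prompt follows the usual few-shot CoT pattern: one worked question and answer, then the new question. As a rough sketch of how such a prompt can be assembled in code (the function name `build_cot_prompt` is hypothetical, and the layout simply mirrors the prompt text used in this post):

```python
from typing import List, Tuple


def build_cot_prompt(exemplars: List[Tuple[str, str]], question: str) -> str:
    """Join worked Q/A exemplars and a final unanswered question."""
    parts = [f"Q: {q}\n\nA: {a}" for q, a in exemplars]
    # The new question is left unanswered so the model continues the pattern.
    parts.append(f"Q: {question}")
    return "\n\n".join(parts)


exemplars = [(
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has three tennis balls. How many tennis balls does he have now?",
    "Roger started with 5 balls. 2 cans of 3 balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11.",
)]
question = (
    "A woodchuck chucked three logs this week, two logs a week ago, "
    "seven logs last year, and one more log three weeks ago. "
    "How much wood did the woodchuck chuck in the last three weeks?"
)
prompt = build_cot_prompt(exemplars, question)
print(prompt)
```

The exemplar answer spells out its arithmetic step by step, which is the part that nudges the model toward showing its own reasoning.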

DeepSeek vs. The Woodchuck

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has three tennis balls. How many tennis balls does he have now?

A: Roger started with 5 balls. 2 cans of 3 balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.

Q: A woodchuck chucked three logs this week, two logs a week ago, seven logs last year, and one more log three weeks ago. How much wood did the woodchuck chuck in the last three weeks?

<think> First, identify all the logs the woodchuck chucked in the specified time frame. This week, the woodchuck chucked 3 logs. Two weeks ago, it chucked 2 logs. Three weeks ago, it chucked 1 log. There's no information about logs chucked last year, so we'll exclude that from our calculation. Add the number of logs chucked over these three weeks: 3 + 2 + 1 = 6. Therefore, the woodchuck chucked a total of 6 logs in the last three weeks. </think> Let's determine how many logs the woodchuck chucked in the last three weeks by considering the information provided:

- **This week:** The woodchuck chucked **3 logs**.
- **Two weeks ago:** It chucked **2 logs**.
- **Three weeks ago:** It chucked **1 log**.

To find the total number of logs chucked in the last three weeks, add up the logs from each of these periods:

\[
3 \text{ (this week)} + 2 \text{ (two weeks ago)} + 1 \text{ (three weeks ago)} = 6
\]

Therefore, the woodchuck chucked a total of **6** logs in the last three weeks.

\boxed{6}

The woodchuck Chucked a total of 6 logs in the last three weeks.

**Step-by-Step Explanation:**

- **Identify Relevant Time Frames:** We are to calculate the number of logs Chucked in the last three weeks. This includes:
  - This week
  - Two weeks ago
  - Three weeks ago
- **List Logs Chucked During These Periods:**
  - This week: 3 logs
  - Two weeks ago: 2 logs
  - Three weeks ago: 1 log
- **Add the Logs Together:**
  - 3 (this week) + 2 (two weeks ago) + 1 (three weeks ago) = 6 logs
- **Conclusion:** The woodchuck chucked a total of 6 logs in the last three weeks.

**Answer:** The woodchuck Chucked a total of 6 logs in the last three weeks.

What if today is January 1st?

<think>

Alright, let's tackle this problem step by step. So, we're dealing with a woodchuck that has been chucking logs over different periods. The question provides information about the number of logs chucked in various time frames:

- **Three weeks ago:** 1 log
- **Two weeks ago:** 2 logs
- **This week (last week):** 3 logs

Additionally, there's a mention of **seven logs last year**, but it seems like that might not be directly relevant to the specific timeframe we're focusing on. The main question is: How many logs did the woodchuck chuck in the last three weeks?

First, let's clarify what "last three weeks" means. If today is January 1st, then:

- **Three weeks ago:** December 12th
- **Two weeks ago:** December 26th
- **Last week:** January 5th

But since we're looking at the last three weeks from today (January 1st), that would encompass December 19th to January 1st. Now, let's map out the logs chucked during this period:

- **Three weeks ago:** 1 log
- **Two weeks ago:** 2 logs
- **Last week:** 3 logs

Adding these up: 1 + 2 + 3 = 6 logs in total. It's important to note that the mention of "seven logs last year" likely refers to a time period outside of the last three weeks, so it doesn't factor into this particular calculation. Therefore, by focusing on the logs chucked over the past three weeks—specifically, the ones from three weeks ago, two weeks ago, and last week—we arrive at a total of **6 logs**. </think> Let's break down the problem step by step:

- **Identify the Time Period:**
  - If today is January 1st, the "last three weeks" would span from December 19th to January 1st.
- **Logs Chucked During This Period:**
  - **Three weeks ago:** 1 log
  - **Two weeks ago:** 2 logs
  - **Last week:** 3 logs
- **Calculate the Total Logs Chucked in the Last Three Weeks:**

  \[
  1 \text{ (three weeks ago)} + 2 \text{ (two weeks ago)} + 3 \text{ (last week)} = 6 \text{ logs}
  \]

- **Consider Other Information:**
  - The mention of "seven logs last year" is likely outside the scope of the current timeframe and does not impact the total for the last three weeks.

**Final Answer:**

\boxed{6}

This is not entirely correct. If it is January 1st, then last year was as recent as a day ago. It is possible that some of the seven logs chucked last year were thrown within the past three weeks. They may or may not have been accounted for in the weekly totals.

<think>

Alright, let's revisit this problem with a fresh perspective now that we're considering the timing relative to January 1st.

**Understanding the Timeline:**

- **Today:** January 1st.

- **Last Year:** December 31st of last year.

Given that, "last year" refers to the very recent past, just one day before today. This means that any logs chucked "last year" would actually fall within the time frame we're considering for the last three weeks (December 19th to January 1st).

**Analyzing the Information:**

- **Three weeks ago:** December 12th – 1 log

- **Two weeks ago:** December 26th – 2 logs

- **Last week:** January 5th – 3 logs

- **Seven logs last year:** December 31st – these are within the past three weeks.

**Calculating Total Logs Chucked in the Last Three Weeks:**

Adding up all the logs chucked from December 19th to January 1st:

\[
1 \text{ (three weeks ago)} + 2 \text{ (two weeks ago)} + 3 \text{ (last week)} + 7 \text{ (last year, within the past three weeks)} = 13 \text{ logs}
\]

**Conclusion:**

By including the seven logs chucked last year that fall within the last three weeks, we arrive at a total of **13 logs**.

---

**Final Answer:**

\boxed{13}

</think>

Given that today is January 1st, "last year" refers to December 31st of the previous year. Since this date falls within the past three weeks (from December 19th to January 1st), the seven logs chucked last year are indeed part of the total for the last three weeks.

**Step-by-Step Breakdown:**

- **Three Weeks Ago:** December 12th – 1 log
- **Two Weeks Ago:** December 26th – 2 logs
- **Last Week:** January 5th – 3 logs
- **Seven Logs Last Year (December 31st):** These fall within the past three weeks.

Adding them up:

\[
1 + 2 + 3 + 7 = 13 \text{ logs}
\]

**Final Answer:**

\boxed{13}

**Final Answer:**

If today is January 1st and none of the seven logs chucked last year overlap with the past three weeks (December 26th to December 31st), then the woodchuck has Chucked a total of **6 logs** in the past three weeks.

However, if some of those seven logs were thrown between December 19th and December 31st, those would already be included in the weekly totals (this week: 3 logs, two weeks ago: 2 logs). In that case, there is no need to adjust the total as the overlap has been accounted for.

Therefore, the woodchuck has Chucked a total of **6 logs** in the past three weeks leading up to January 1st.

---

**Step-by-Step Explanation:**

- **Clarify Timeframes:**
  - Today: January 1st
  - This Week (December 26th to January 1st): 3 logs
  - Two Weeks Ago (December 19th to December 25th): 2 logs
  - Three Weeks Ago (December 12th to December 18th): 1 log
- **Identify Overlapping Periods:**
  - Logs chucked last year could overlap with the past three weeks if they were thrown between December 19th and December 31st.
- **Assess Given Totals:**
  - This week: 3 logs
  - Two weeks ago: 2 logs
  - Three weeks ago: 1 log
- **Determine Overlap with Last Year:**
  - If the seven logs from last year were thrown before December 19th, they don't overlap.
  - If any were thrown between December 19th and December 31st, those are already included in the two-week or one-week totals.
- **Calculate Total Logs:**
  - Assuming no overlap: 3 (this week) + 2 (two weeks ago) + 1 (three weeks ago) = 6 logs
  - If there's overlap (e.g., some of the seven logs were thrown in the past three weeks), subtract the overlapping logs to avoid double-counting.
- **Conclusion:**
  - Based on the given information, it's most likely that all seven logs from last year are accounted for outside the past three weeks.
  - Therefore, the woodchuck has Chucked a total of 6 logs in the past three weeks leading up to January 1st.

---

**Answer:** If today is January 1st and none of the seven logs chucked last year overlap with the past three weeks, then the woodchuck has Chucked a total of **6 logs**. However, if some of those seven logs were thrown within the past three weeks, the totals would need to be adjusted accordingly.

You Were Warned

DeepSeek has clearly taken the CoT concept to the next level! Does this improve results or is it a waste of time? I appreciate that the developers are pushing the envelope with this approach, but the results seem to indicate that the intense focus on CoT did not, in this case, lead to significantly better results. Note that my single test is obviously not the final word–many others are testing DeepSeek more thoroughly, comparing the performance to that of other models.
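If you want to gauge how much of a response is reasoning versus answer, the `<think>` tags in the output make that easy to automate. The following is a small sketch, assuming the reasoning is always wrapped in `<think>...</think>` as in the transcript above; `split_reasoning` is a hypothetical helper name, and the sample string is a shortened stand-in rather than real model output.

```python
import re
from typing import Tuple

# Non-greedy match so several <think> blocks are captured separately.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(output: str) -> Tuple[str, str]:
    """Return (reasoning, answer) from a response containing <think> blocks."""
    reasoning = "\n".join(m.strip() for m in THINK_RE.findall(output))
    answer = THINK_RE.sub("", output).strip()
    return reasoning, answer


sample = "<think> 3 + 2 + 1 = 6. </think> The woodchuck chucked 6 logs."
reasoning, answer = split_reasoning(sample)
print(len(reasoning.split()), "reasoning words;", len(answer.split()), "answer words")
```

Word counts are only a crude proxy for effort, but they give a quick sense of how heavily a given response leans on its reasoning section.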

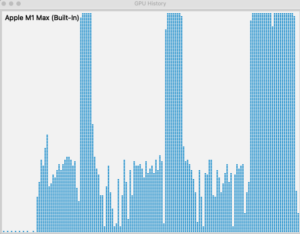

As a side note, since I ran DeepSeek locally I was able to track my Mac’s GPU usage. You can see the surges as the model processed the three parts of my query. Again, this was not a systematic test to conclusively prove anything about DeepSeek or Apple Silicon. But it is interesting.

Figure 3: Mac GPU usage while DeepSeek tries to determine how much wood a woodchuck chucked. Not shown is the sound of the fan kicking on…

The State of Chain of Thought

Chain of Thought has its limitations. Like all prompting, it most improves results on queries that closely resemble the prompt. In the case of CoT these are structured, logical tasks with explicit reasoning. It is less effective in scenarios that stray far from this pattern, particularly those that require non-linear thinking, subjectivity, or creativity. And taking the DeepSeek approach of doubling down on CoT doesn’t necessarily improve results.

But something about the CoT approach seems to resonate with modern LLMs: more so than other techniques, it tends to improve results across a wide range of queries. These improvements are strong enough that it has become a standard component of LLM training.

The simplicity and limitations of the Chain of Thought technique might lead some to view it as interesting but not particularly important. I believe the true value lies not in CoT itself, but in what it indicates about the evolving capabilities of LLMs.

The Implications of Chain of Thought

As mentioned earlier, large language models have primarily been trained on readily accessible text-based content. There remains an enormous amount of untapped text and other types of media that could potentially be used for future training. At the same time, the algorithms powering LLMs are continuously improving, and their capabilities are expanding. This suggests that LLMs will continue to enhance their ability to recognize and understand the relationships between words, ideas, and even broader concepts.

A deeper understanding of these relationships could transform the way LLMs are trained, allowing them to extract more meaningful insights from examples and instructional data, similar to how they are beginning to learn from Chain of Thought reasoning. A key question is whether LLMs can learn from diverse sources in a way that more closely resembles human thinking, or in a different but equally powerful fashion. Could this progression lead to the development of “Large Concept Models” that extend their reasoning abilities beyond language itself?

LLMs will certainly continue to improve, but what will be the primary driver of this progress? Will it come mainly from technical and algorithmic innovations, or will approaches inspired by human learning play a more significant role? Could this shift lead to models that not only replicate patterns more effectively and produce better outputs but also develop a deeper, more human-like understanding of information? This fascinating article suggests that the most powerful models may already have reached this level, but that we are struggling to recognize these improvements! Many scientists believe that it is only a matter of time before we produce an Artificial General Intelligence.

Chain of Thought, while interesting on its own, has broader implications. It offers a glimpse into a (possibly near) future where LLMs may learn and process information in more sophisticated and powerful ways. As these models scale, what new abilities might emerge?