In the first post on this topic, I discussed positional encoding in general and illustrated some of the challenges (opportunities?) unique to developing AI software—particularly, the difficulty in specifying ahead of time what you are trying to produce. With truly innovative work often a more empirical approach is required.

In this post, I will examine a couple of positional encoding algorithms in more depth and attempt to derive a set of specifications for a good algorithm. As this topic is still the subject of much research, the results are not crystal clear but still worth considering.

Absolute Positional Encoding

An obvious approach to the problem of positional encoding for sequences of words would be to represent the positioning of each word with a single, monotonically increasing value. A system using absolute numbering (1, 2, 3, …) is easy to implement, easy to work with, and easy to understand.

The only limit to the number of words that can be encoded is the number of bits dedicated to the encoding. Note that a solution like this would require only a single value to fully specify position. There is no obvious need for a vector of values.

Solutions like this have been attempted. Surprisingly, they have not (in the research I found) been proven to work effectively in neural networks, for a number of reasons.

One knock against this approach is that it emphasizes the absolute positioning of each word as opposed to the relative positioning of words. For instance, in an absolute scheme it is easy to determine what the fourth or fifth word is, but harder to figure out which words precede or follow the current word by four or five steps. Both types of information are potentially valuable.

Another disadvantage of an absolute system is that the numbers grow larger as the word context (set of surrounding words) scales. This can over-emphasize words at the start or end of the context, as neural networks tend to respond most to the smallest or largest values.

By default, absolute numbering generates numbers that are suboptimal for neural networks. Network calculations function best when all values are normalized to a range between -1.0 and 1.0 (or 0.0–1.0). Normalizing all the data ensures that no one piece of data (such as positional encoding) overwhelms other data. It also prevents the exploding gradient issue, where large numbers multiplied together many times grow far too large to be useful in the system.

Absolute numbering is easy for humans but not ideal for neural networks.

Sinusoidal Encoding

In the sinusoidal encoding algorithm specified in AIAYN, multiple curves of various frequencies are sampled to build a vector. For example, if the word vectors contain 512 elements, then 512 different curves will be sampled for the position vectors. Sinusoids are cyclical, but the combination of a high sampling rate of many different curves reduces the possibility of duplicate vectors.

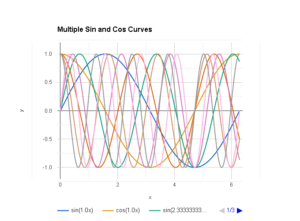

Figure 2: Multiple sinusoidal curves over the same period, created by Google Gemini

For example, consider the values for each of the eight curves at the positions marked 2 or 4 in Figure 2. You could use these samples to form a vector with eight different values at each point and specify the positions of the second and fourth words. In practice, many more sinusoids frequencies are sampled at a far higher frequency to produce the position vectors.

The combination of low- and high-frequency curves provide position information at multiple scales, which is useful for both absolute and relative positioning. Creating a position vector that is the same dimension as the word vector helps to avoid a single piece of position information getting lost in the noise, and makes it easy to combine the position data with the existing word information.

In this encoding scheme, the amplitude of the curves is restricted to a normalized range (-1.0 to 1.0). Normalization is commonly performed for all of the data in neural networks, as it has been shown to work well with these systems.

Overall, this sinusoidal algorithm provides the critical relative word positioning in a format that is better suited for neural network processing. While it is non-intuitive for humans, many systems have successfully used this encoding system, proving it out in practice.

Derived Requirements

Based upon the discussion of the two examples above, some idea of the requirements for a good positional encoding algorithm can be deduced.

The goal of the positional encoding is to nudge the word vector in a good direction. Unfortunately, what “nudge” and “good direction” mean is not yet well defined. Each encoding system must be tested to determine how well it works for the particular scenario (chatbot, language translation, etc.), using standard datasets and accepted benchmarks such as BLEU score.

Some required properties appear to be:

- The position information should be consistent across sequences of words (e.g., position 5 should always have the same representation).

- Each location must have a unique specification. The chosen system can cycle, but not so quickly that it causes duplication in position information.

- The position should be encoded in a format that works well for neural networks in general, and with the specific word encoding in particular. It should be in a normalized range, and match the vector length for the word embedding.

- Both relative and absolute positioning information should be present.

- The system should place more weight on words closer to the subject word (as opposed to the first and last words in the sequence).

With this information, we have a better ability to design good encoding systems or select among existing choices. It is still not as clear as a good product specification, but that is the nature of the work. If you love technology and are excited by the opportunity to create new algorithms, then this area, as in so much of AI, represents a fun opportunity.

Ongoing Research

I presented an easy and obvious but flawed algorithm and compared it against an accepted but complicated solution. But these are not the only two possibilities! Despite the fact that sampling of sinusoidal curves has some benefits and was specified in the initial transformer architecture paper, many other researchers have sought to understand why it works and how it could be improved upon.

Some of the original authors of AIAYN have proposed an alternative embedding scheme, which more strongly emphasizes the relative positioning in a sequence: Self-Attention with Relative Position Representations.

Other researchers have tried to leverage the power of neural networks to determine the optimal values for encoding position information. Given the ability of neural networks to optimize systems, and the fact that we don’t know exactly how to specify optimal position information ahead of time, this approach is reasonable. In the past when it was tried the results were no better than other algorithms. But new approaches are still being investigated: Learned positional embeddings.

Currently, one of the most popular ways of encoding positions, applicable to all sorts of spatial domains, is Rotary Positional Embeddings RoPE. This technique still encodes positions as a vector and is still conceptually challenging. But it seems to demonstrate improvements over sinusoidal and most other encoding systems.

More To Come

The small domain of positional encoding demonstrates some fundamental truths about the current state of AI development. The technologies are interesting and not always fully understood. They already produce amazing results, but there is still plenty of room for innovation and exploration.

With this in mind, I fully expect that AI applications will continue to improve over time as more of us work to improve the state of the art. There is plenty of room for both research into algorithms and old-fashioned software optimization and experimentation. We may well be in the Golden Age of AI.